I’ve been learning about PBR recently, and I have some doubts about computing the diffuse term with IBL. When converting between directions and spherical coordinates, I found that most articles use a z-up convention, whereas three.js is y-up, with the azimuth measured from +z. I tried to convolve the envMap using this convention, but I got the wrong image. Can someone explain what I’m doing wrong?

Result:

Here are the modifications I made:

```glsl
vec3 sphericalEnvmapToDirection(vec2 tex) {
  float theta = PI * (1.0 - tex.t);
  float phi = 2.0 * PI * (0.5 - tex.s);
  // return vec3(sin(theta) * cos(phi), sin(theta) * sin(phi), cos(theta));
  return vec3(sin(theta) * sin(phi), cos(theta), sin(theta) * cos(phi));
}

vec2 directionToSphericalEnvmap(vec3 dir) {
  // float phi = atan(dir.y, dir.x);
  // float theta = acos(dir.z);
  float phi = atan(dir.x, dir.z);
  float theta = acos(dir.y);
  float s = 0.5 - phi / (2.0 * PI);
  float t = 1.0 - theta / PI;
  return vec2(s, t);
}
```
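To check the two mapping functions in isolation, here is a minimal CPU-side TypeScript port (TypeScript only because the demo runs on three.js; the helper `maxRoundTripError` is hypothetical, not part of the original code). It maps texel centres to directions and back, which should reproduce the original `uv` up to rounding if the two functions are exact inverses. GLSL's two-argument `atan(y, x)` corresponds to `Math.atan2(y, x)`; the clamp before `acos` is a CPU-side safety addition that the shader does not have.

```typescript
// CPU-side port of the two GLSL mapping functions, for checking them in isolation.
type Vec2 = [number, number];
type Vec3 = [number, number, number];

const PI = Math.PI;

// y-up: theta is measured from +y, phi is measured from +z towards +x.
function sphericalEnvmapToDirection(tex: Vec2): Vec3 {
  const theta = PI * (1.0 - tex[1]);
  const phi = 2.0 * PI * (0.5 - tex[0]);
  return [Math.sin(theta) * Math.sin(phi), Math.cos(theta), Math.sin(theta) * Math.cos(phi)];
}

// GLSL atan(dir.x, dir.z) == Math.atan2(dir.x, dir.z).
function directionToSphericalEnvmap(dir: Vec3): Vec2 {
  const phi = Math.atan2(dir[0], dir[2]);
  // Clamp is a CPU-side safety addition (avoids NaN from rounding).
  const theta = Math.acos(Math.min(1, Math.max(-1, dir[1])));
  return [0.5 - phi / (2.0 * PI), 1.0 - theta / PI];
}

// Hypothetical helper: round-trip every texel centre of a width x height
// envmap and return the largest absolute uv error.
function maxRoundTripError(width: number, height: number): number {
  let maxErr = 0;
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      const uv: Vec2 = [(x + 0.5) / width, (y + 0.5) / height];
      const back = directionToSphericalEnvmap(sphericalEnvmapToDirection(uv));
      maxErr = Math.max(maxErr, Math.abs(back[0] - uv[0]), Math.abs(back[1] - uv[1]));
    }
  }
  return maxErr;
}

console.log(maxRoundTripError(64, 32));
```

If the error stays near machine precision, the two functions are consistent. Since they only relabel the axes of a standard equirectangular layout, and the cosine convolution does not care how the sphere is rotated, the y-up convention by itself should not distort the result as long as the same functions are used to read the prefiltered map back; that suggests looking at the per-sample frame inside the loop instead.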

And here is the sampling loop:

```glsl
vec3 prefilterEnvMapDiffuse(in sampler2D envmapSampler, in vec2 tex) {
  float px = t2p(tex.x, width);
  float py = t2p(tex.y, height);

  // direction of the output texel, used as the surface normal
  vec3 normal = sphericalEnvmapToDirection(tex);
  mat3 normalTransform = getNormalFrame(normal);

  vec3 result = vec3(0.0);
  uint N = uint(samples);

  for(uint n = 0u; n < N; n++) {
    vec3 random = random_pcg3d(uvec3(px, py, n));
    // cosine-weighted hemisphere sample: sin^2(theta) = random.y
    float phi = 2.0 * PI * random.x;
    float theta = asin(sqrt(random.y));
    // vec3 posLocal = vec3(sin(theta) * cos(phi), sin(theta) * sin(phi), cos(theta));
    vec3 posLocal = vec3(sin(theta) * sin(phi), cos(theta), sin(theta) * cos(phi));
    // transform the local sample into world space with the frame from getNormalFrame
    vec3 posWorld = normalTransform * posLocal;
    vec2 uv = directionToSphericalEnvmap(posWorld);
    vec3 radiance = textureLod(envmapSampler, uv, mipmapLevel).rgb;
    result += radiance;
  }

  result = result / float(N);
  return result;
}
```
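`getNormalFrame` is not shown in the post. Many implementations return `mat3(tangent, bitangent, normal)`, so that the local +z axis becomes the normal; the sketch below assumes that (its `getNormalFrame` is a hypothetical stand-in) and compares the two `posLocal` lines from the loop. It averages `dot(posWorld, normal)` over a regular grid of `(random.x, random.y)` values: for a cosine-weighted sample around the normal this average approaches 2/3, while a sample whose `cos(theta)` sits in the middle slot ends up centred on the bitangent, with half of it below the surface.

```typescript
type Vec3 = [number, number, number];
type Frame = [Vec3, Vec3, Vec3]; // columns: tangent, bitangent, normal

function dot(a: Vec3, b: Vec3): number {
  return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}
function cross(a: Vec3, b: Vec3): Vec3 {
  return [a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]];
}
function normalize(v: Vec3): Vec3 {
  const l = Math.hypot(v[0], v[1], v[2]);
  return [v[0] / l, v[1] / l, v[2] / l];
}

// HYPOTHETICAL stand-in for getNormalFrame: assumed to build mat3(T, B, N),
// i.e. the normal is the third column (local +z).
function getNormalFrame(n: Vec3): Frame {
  const helper: Vec3 = Math.abs(n[0]) < 0.9 ? [1, 0, 0] : [0, 1, 0];
  const t = normalize(cross(helper, n));
  const b = cross(n, t);
  return [t, b, n];
}

// GLSL `m * v` for a column-major mat3: v.x * col0 + v.y * col1 + v.z * col2.
function mulFrame(m: Frame, v: Vec3): Vec3 {
  const [t, b, n] = m;
  return [
    t[0] * v[0] + b[0] * v[1] + n[0] * v[2],
    t[1] * v[0] + b[1] * v[1] + n[1] * v[2],
    t[2] * v[0] + b[2] * v[1] + n[2] * v[2],
  ];
}

// Cosine-weighted sample as in the loop: sin^2(theta) = u2, pdf = cos(theta) / PI.
function sampleLocal(u1: number, u2: number, cosSlot: "y" | "z"): Vec3 {
  const phi = 2 * Math.PI * u1;
  const theta = Math.asin(Math.sqrt(u2));
  const s = Math.sin(theta);
  const c = Math.cos(theta);
  return cosSlot === "z"
    ? [s * Math.cos(phi), s * Math.sin(phi), c] // commented-out z-up line
    : [s * Math.sin(phi), c, s * Math.cos(phi)]; // y-up line used in the loop
}

// Mean of dot(posWorld, normal) over a gridSize x gridSize grid of (u1, u2).
function meanCosToNormal(normal: Vec3, cosSlot: "y" | "z", gridSize = 64): number {
  const frame = getNormalFrame(normalize(normal));
  let sum = 0;
  for (let i = 0; i < gridSize; i++) {
    for (let j = 0; j < gridSize; j++) {
      const local = sampleLocal((i + 0.5) / gridSize, (j + 0.5) / gridSize, cosSlot);
      sum += dot(mulFrame(frame, local), frame[2]);
    }
  }
  return sum / (gridSize * gridSize);
}

console.log(meanCosToNormal([0, 1, 0], "z"), meanCosToNormal([0, 1, 0], "y"));
```

The local hemisphere frame is independent of the world's up axis: only the envmap `uv` mapping follows the y-up convention. Also, because the pdf is cos(theta)/PI, the plain average of `radiance` in the loop estimates irradiance divided by PI, which is why no explicit cosine weight appears.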

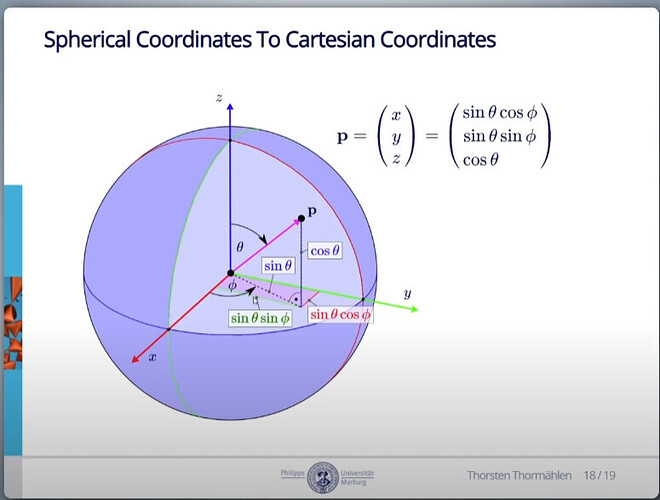

This is the axis convention used in most pages and papers:
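For reference, the two parametrizations that appear in the code (the commented-out z-up line and the y-up replacement with the azimuth measured from +z), plus the inverse used in `directionToSphericalEnvmap`, can be written side by side. As far as I can tell, the y-up form matches three.js's `Vector3.setFromSphericalCoords(radius, phi, theta)`, where three.js calls the polar angle `phi` and the azimuth `theta`.

$$
\begin{aligned}
\mathbf{d}_{z\text{-up}}(\theta,\phi) &= (\sin\theta\cos\phi,\ \sin\theta\sin\phi,\ \cos\theta) \\
\mathbf{d}_{y\text{-up}}(\theta,\phi) &= (\sin\theta\sin\phi,\ \cos\theta,\ \sin\theta\cos\phi) \\
\theta &= \arccos d_y, \qquad \phi = \operatorname{atan2}(d_x, d_z), \qquad s = \tfrac{1}{2} - \tfrac{\phi}{2\pi}, \qquad t = 1 - \tfrac{\theta}{\pi}
\end{aligned}
$$

Component-wise, $(x, y, z)_{y\text{-up}} = (y, z, x)_{z\text{-up}}$: a cyclic permutation of the axes, which is a rotation rather than a reflection.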

I made an example for anyone who wants to debug it: demo

To see the results, click the play button in Time Control.

If you want to modify the shader, select the shader node and click the edit code button.