[gonna read this tomorrow and edit probably, bedtime now]

btw this post is my wip to gather some best practices, not a response to to anything or anyone specific, probably half of the content is directed to my future self or any interested onlookers and half to popov

–

not mixing up! I used a 3d texture as an example because we could theoretically split a 3d texture into layers based on z coordinate and operate independently on each xy partition. If the declaration of workgroup_size could have any benefit, i just thought this could be a scenario to answer the question “Is there a difference between (8,8,1) vs 64?” Maybe someone else can think of a scenario that should (theoretically, not necessarily in reality) have potential to benefit from being able to declare a 2d or 3d workgroup size?

–

see, it is a good example ![]() You made it better though. We have several scenarios now.

You made it better though. We have several scenarios now.

1 - workgroup size of (32,1,1), Dispatch with parameters (16,1,1) - your original scenario

2 - workgroup size of (8,8,1), Dispatch with parameters (8,1,1) - same, account for amd

3 -workgroup size of (64,1,1), Dispatch with parameters (8,1,1) - same, but 8,8,1 vs 64

4 - workgroup size of (4,1,1), Dispatch with parameters (4,4,4) - poorly chosen workgroup size

5a - workgroup size of (16,8,4), Dispatch with parameters (1,1,1) - your final suggested scenario

5b - workgroup size of (16,8,4), Dispatch with parameters (1,1,1) - your final suggested scenario but remove any shared memory in shader

5c - workgroup size of (64,1,1), Dispatch with parameters (2,1,1) - your final suggested scenario, with shared memory as in 5a, but better sized workgroup considering shared memory. amd specific

5d - workgroup size of (32,1,1), Dispatch with parameters (2,1,1) - your final suggested scenario, with shared memory as in 5a, but better sized workgroup considering shared memory. nvidia specific

6 - workgroup size of (1024), Dispatch with parameters (1,1,1) - easy optimization test, no shared memory.

my expectations from those scenarios based on everything discussed so far:

1 - as fast as 2 and 3 on nvidia, much slower than 2 and 3 on amd.

2a - faster than 1 on amd. (proving amd with workgroup_size(64) is faster than workgroup_size(32)) - not actually sure if amd does or doesnt do well with workgroup_size(32) here, we should test a certainly bad workgroup size too.

2b - same perf as 3, proving (proving 8,8,1 vs 64 doesnt matter)

3 - same perf as 2 (proving 8,8,1 vs 64 doesnt matter)

4 - much worse performance than all other scenarios

5 - 5a will be slower than 5b, 5c, and 5d who all perform the same

6 - performs same as 2 and 3

–

Sorry, I was unclear. I equate workgroup with warp in my mind, but I should have said “For shared memory, the warp/wave workgroup has shared local memory (atomics) within the warp/wave workgroup, but i think multiple warp/wave workgroups also potentially get shared memory up to 1024 threads (32 warps or 16 waves) when using webgpu (aka maxComputeInvocationsPerWorkgroup).”

Anyway, I see what you mean. But also, id say the scope of shared memory is determined at dispatch (compiling a shader doesn’t allocate). Also, dispatch count is variable since its based on the end-user’s hardware and i guess i think of that as the top level context parameter.

–

i think SM is just the CUDA term for maxComputeInvocationsPerWorkgroup or like, how many compute units use the same memory stick. Can we restate your second bullet as "a compute shader shouldn’t use more memory than:

maxComputeWorkgroupStorageSize / ( maxComputeInvocationsPerWorkgroup / (32 or 64).

Finally, i think we should think about the problem in the context of actual hardware layout. these diagrams that i copy pasta’d from google images are a nice visual aid. I probably dont understand more than 1% of what these pictures imply, but still helpful to see different chip layouts and the physical access the compute units have to the same memory stick. this is my understanding of maxComputeInvocationsPerWorkgroup in picture form.

nvidia data center gpus

nokia phone

amd

apple

key takeaways (pending review!)

<insert notable scenario outcome>

(highly questionable hot take by me here)

If no shared memory besides atomics local to workgroup invocation, choose lesser of maxComputeInvocationsPerWorkgroup and maxComputeWorkgroupSizeX as 1d max workgroup_size and dispatch 1, the compiler should be able to optimize this.

(restating @Evgeni_Popov’s last bullet)

Compute shader shouldn’t use more memory than maxComputeWorkgroupStorageSize / ( maxComputeInvocationsPerWorkgroup / (32 or 64).

(webgpufundamentals).

Choose a workgroup size of 64 unless you have some specific reason to choose another size

Uniformity analysis explanation:

@arcman7 regarding texture vs storage, it seems that textures are always filtered, so image or storage is faster. However, there is a vulkan extension to read textures without sampling.

—-

Quick notes

Amd dev advocate said 32 wavefront is native and 64 causes 32x2 in lockstep on rdna2. If same for rdna3 , reevaluate assumption 64 is best, bc amd has 2 paths and webgpu driver could choose 64 and be perf loss

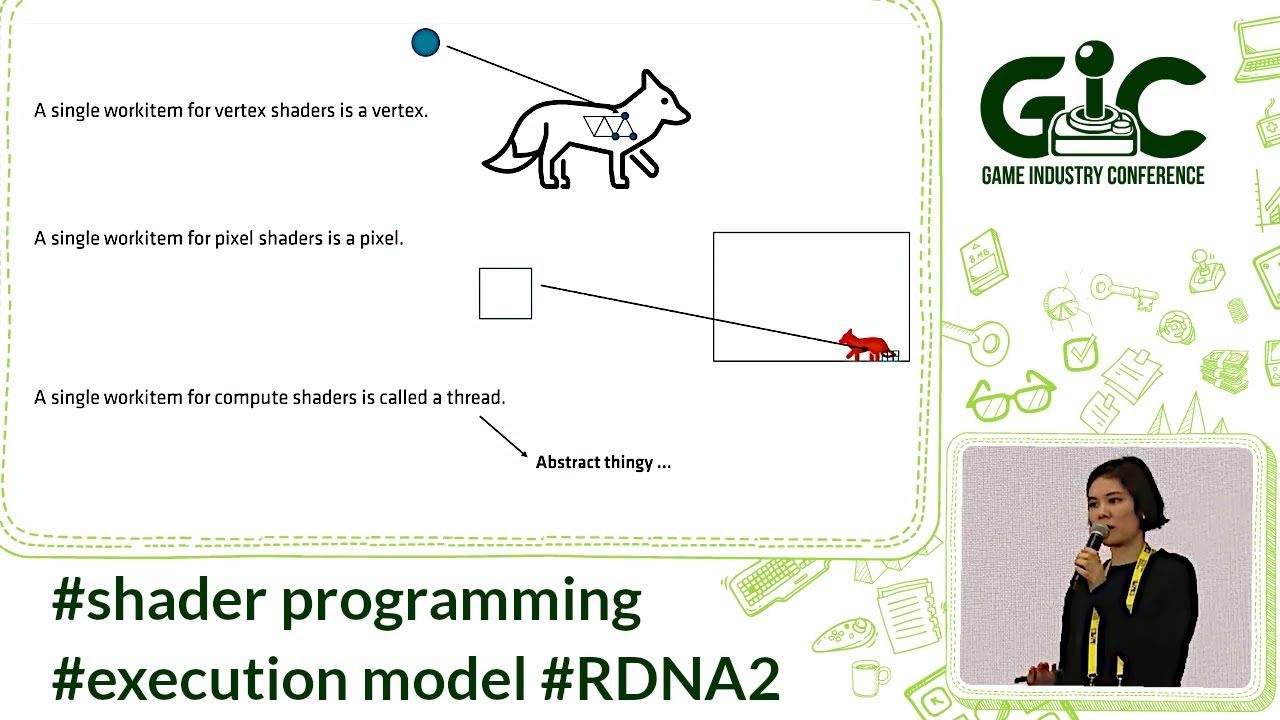

@Evgeni_Popov around 23 mins she says 64x1 vs 8v8 can be significantly different because it allows different access patterns which effects cache layout. At 48 mins she answers a question about use case for 3rd dim workgroup size. Lol she answered the question and says it can impact performance and he goes “hehe.. why” .