See the current status of the WebGPU implementation, along with some notes about performance, here: WebGPU Status | Babylon.js Documentation

The problem is that there are still API calls to the browser, and those take time. In WebGL2, with a VAO, a single call sets up the vertex and index buffers. In WebGPU you have one call to set the index buffer and one or more calls to set the vertex buffers (3 calls on average if you have positions, normals and uvs). In addition to these calls, you need to call setPipeline, setBindGroup (possibly several times) and draw. So, for each mesh, you need to issue between 6 and 10 API calls to draw it. Another problem is that the philosophy/design of the new API is completely different from that of WebGL. As we need to be backward compatible and want everything currently working in WebGL to also work in WebGPU, we have a number of constraints to deal with that we would not have if we had started a new engine from scratch.
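To make the per-mesh call count concrete, here is a rough TypeScript sketch. It is not Babylon.js code: `PassLike`, `MeshDrawData`, `CountingPass` and `drawMesh` are hypothetical names for illustration, and `PassLike` only mirrors the method names of WebGPU's `GPURenderPassEncoder` so that the example runs without a GPU.

```typescript
// Minimal stand-in for the subset of GPURenderPassEncoder used here.
// Handles are typed as unknown so the sketch runs without WebGPU typings.
interface PassLike {
  setPipeline(pipeline: unknown): void;
  setBindGroup(index: number, bindGroup: unknown): void;
  setIndexBuffer(buffer: unknown, format: "uint16" | "uint32"): void;
  setVertexBuffer(slot: number, buffer: unknown): void;
  drawIndexed(indexCount: number): void;
}

// Hypothetical per-mesh data (an assumption, not the engine's internal layout).
interface MeshDrawData {
  pipeline: unknown;
  bindGroups: unknown[];
  indexBuffer: unknown;
  vertexBuffers: unknown[]; // e.g. positions, normals, uvs
  indexCount: number;
}

// Records the name of every API call instead of talking to the GPU.
class CountingPass implements PassLike {
  calls: string[] = [];
  setPipeline(): void { this.calls.push("setPipeline"); }
  setBindGroup(): void { this.calls.push("setBindGroup"); }
  setIndexBuffer(): void { this.calls.push("setIndexBuffer"); }
  setVertexBuffer(): void { this.calls.push("setVertexBuffer"); }
  drawIndexed(): void { this.calls.push("drawIndexed"); }
}

// Issues every call needed to draw one mesh.
function drawMesh(pass: PassLike, mesh: MeshDrawData): void {
  pass.setPipeline(mesh.pipeline);
  mesh.bindGroups.forEach((group, i) => pass.setBindGroup(i, group));
  pass.setIndexBuffer(mesh.indexBuffer, "uint16");
  mesh.vertexBuffers.forEach((buffer, slot) => pass.setVertexBuffer(slot, buffer));
  pass.drawIndexed(mesh.indexCount);
}

// One mesh with 2 bind groups and 3 vertex buffers (positions, normals, uvs).
const exampleMesh: MeshDrawData = {
  pipeline: {},
  bindGroups: [{}, {}],
  indexBuffer: {},
  vertexBuffers: [{}, {}, {}],
  indexCount: 36,
};

const examplePass = new CountingPass();
drawMesh(examplePass, exampleMesh);
console.log(examplePass.calls.length); // 8
```

With two bind groups and three vertex buffers this comes to 8 calls for a single mesh, which sits inside the 6 to 10 range mentioned above.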

Note that your test scene is not really suitable for perf testing because there are too many individual objects. The fps drops only because the JavaScript side must handle all these objects (independently of WebGL or WebGPU): it must collect them, compute the world matrices, recompute the bounding boxes and display them. It also ends up issuing (tens of) thousands of draw calls per frame, which is not really sustainable. There’s nothing related to the gfx API in the top slowest functions (the “(anonymous)” line is the frame function of the PG):

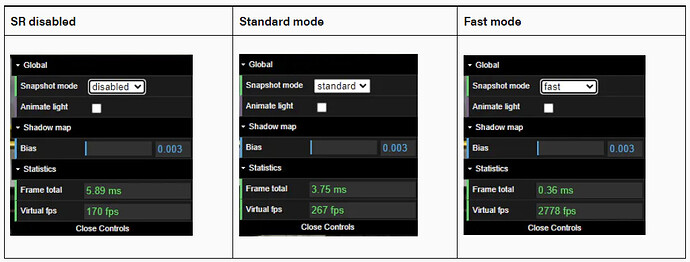

There are ways to improve performance, and the main one is using bundles to wrap all the API calls needed to draw a mesh. We have added a new snapshot rendering mode (only available in WebGPU) that uses bundles; see this doc page. Depending on your scene, it can help a lot with performance, especially when using the fast mode. From the doc (SR means “snapshot rendering”):

This is with a real scenery, namely moving around in a power plant and using shadow mapping.

Snapshot rendering has some drawbacks, however: it can be applied only in specific cases (see doc). That’s why we are currently working on another mode called compatibilityMode (engine.compatibilityMode): when set to false, we will switch to a mode where we record a bundle for each mesh we draw and reuse this cached bundle in subsequent frames.

We are trying to make this mode work as broadly as possible (so with as few constraints as possible for the user). The difficulty is updating the cached bundle when necessary while doing so as infrequently as possible (because rebuilding the bundle + drawing it is slower than simply drawing the mesh). It’s a work in progress: don’t try to enable it in production, as you won’t see any difference and you will instead have rendering artifacts (depending on your scene).
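The general idea of caching one bundle per mesh and rebuilding it only when needed could be sketched roughly like this. This is only an illustration under assumptions, not how the engine does it: `BundleCache` and the per-mesh `version` counter are hypothetical, and the type parameter `B` stands in for a `GPURenderBundle` so the example runs without a GPU. In real WebGPU code, recording would go through `device.createRenderBundleEncoder(...)` and `finish()`, and replay through `pass.executeBundles([...])`.

```typescript
// Hypothetical per-mesh bundle cache; NOT Babylon.js's actual implementation.
// B stands in for GPURenderBundle so the sketch runs without a GPU.
class BundleCache<B> {
  private entries = new Map<number, { bundle: B; version: number }>();
  recordCount = 0; // how many times a bundle had to be (re)recorded

  // Returns the cached bundle, re-recording only when the mesh's state
  // version (an assumed change counter) differs from the cached one.
  get(meshId: number, version: number, record: () => B): B {
    const entry = this.entries.get(meshId);
    if (entry !== undefined && entry.version === version) {
      return entry.bundle; // cheap path: reuse the recorded calls
    }
    const bundle = record(); // slow path: rebuild the bundle
    this.recordCount++;
    this.entries.set(meshId, { bundle, version });
    return bundle;
  }
}

// Simulate three frames for one mesh: unchanged, unchanged, then modified.
const exampleCache = new BundleCache<string>();
const versionsPerFrame = [0, 0, 1];
for (const version of versionsPerFrame) {
  // The string is a placeholder for a recorded bundle.
  exampleCache.get(1, version, () => `mesh-1@v${version}`);
}
console.log(exampleCache.recordCount); // 2
```

The hard part described above is deciding when that version should change: bump it too rarely and you get rendering artifacts, bump it too often and you pay the rebuild cost on top of the draw.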

If we are successful with this compatibilityMode (which we hope!), we expect to reach the same level of performance as WebGL, or better, in real-world scenarios.