Ugh, my brain melted while trying to write something coherent here, so please excuse me if it isn’t. Thanks for your patience. Too many new concepts lately in my hobby game dev time.

I didn’t understand where the ‘contextual’ value was coming from. It couldn’t be the ‘first’ geometry in the scene, because in the geometry editor we can have many top-level geometry-emitting nodes.

And because of my other experience with graphs (e.g. DAGs), I wasn’t expecting backpropagation / lookaheads to derive values. That’s what I meant by:

> because their descendant nodes don’t converge until later at the Set positions node.

You answered this for my example playground:

> the Normals contextual will pick the new normals from the geometry and you can continue your build-up

and

> […] In that context, normals refer to geometry2.5 normals

But I just didn’t understand the logic behind how the normals contextual (and the others) were being resolved.

Seeing this doc section helped:

> A contextual value is derived from the closest geometry node associated with this node.
>
> […]

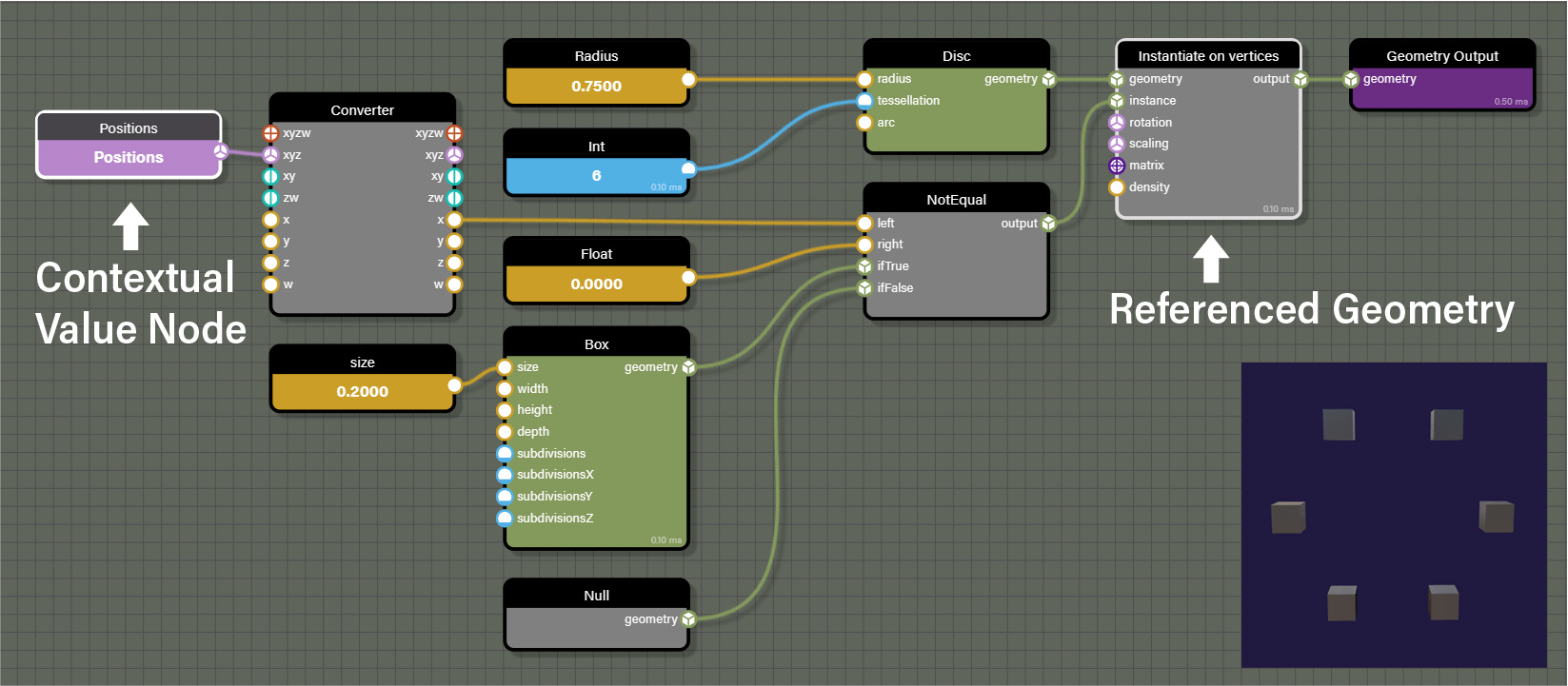

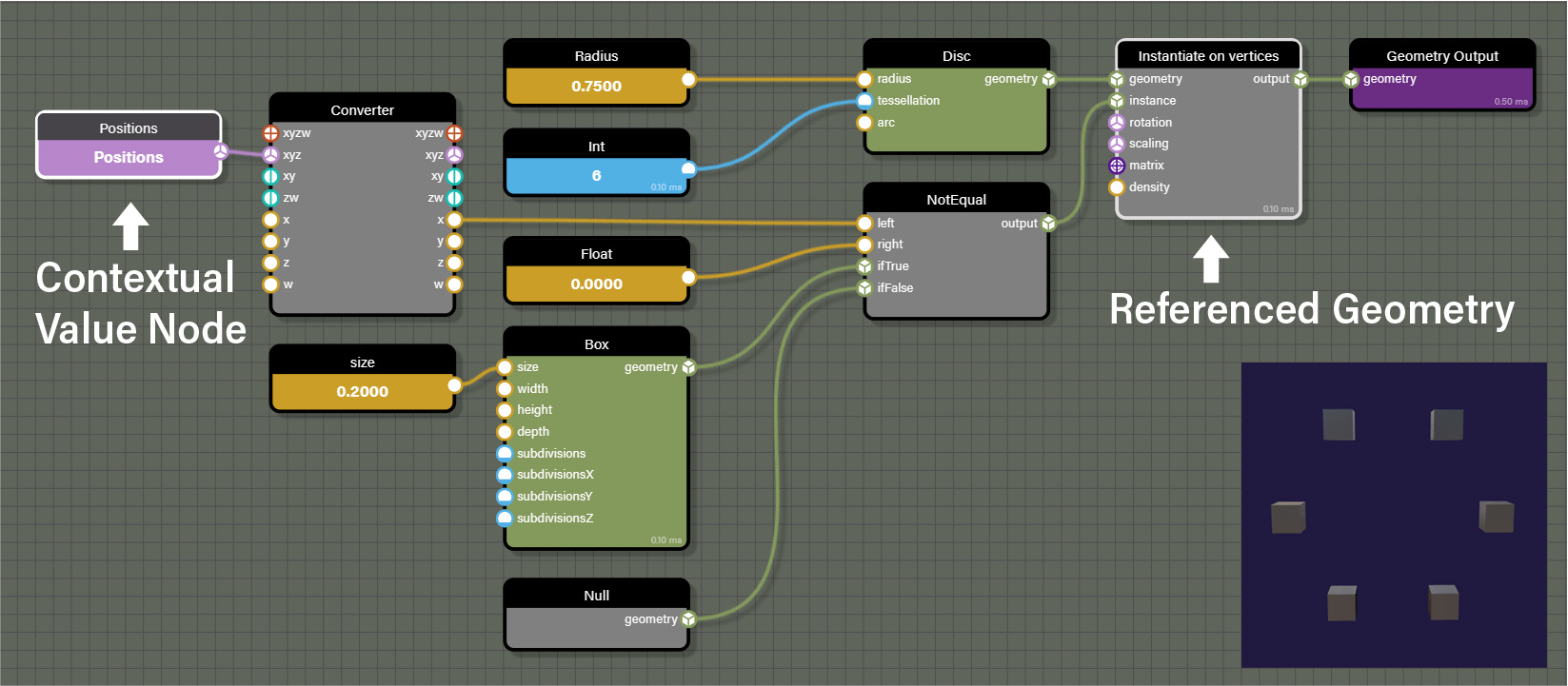

So I made this to understand it better:

It’s a playground using colors instead of normals, where colors are initially set for two separate geometries and then updated for an aggregate geometry. The color in the final Set Color does not reference the original geometries (where only one color is used in each) but the aggregate geometry (where colors are defined for all vertices). (This playground might not actually prove anything if the data structure underneath isn’t what I think it is.)
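A toy TypeScript model of what the playground seems to show. Everything here (`Geometry`, `Contextual`, `merge`, `setColors`) is a made-up name for illustration, not the NGE API, and it assumes a contextual is simply evaluated against whatever geometry the consuming node receives:

```typescript
// Toy model only: these types and functions are hypothetical, not Babylon.js NGE code.
type Color = [number, number, number];

interface Geometry {
  name: string;
  colors: Color[]; // one color per vertex
}

// A contextual has no geometry of its own. It reads from whichever geometry
// the consuming node hands it at evaluation time (assumption of this sketch).
type Contextual<T> = (context: Geometry, vertexIndex: number) => T;

const contextualColor: Contextual<Color> = (context, i) => context.colors[i];

function makeGeometry(name: string, vertexCount: number, color: Color): Geometry {
  return { name, colors: Array.from({ length: vertexCount }, () => color) };
}

function merge(a: Geometry, b: Geometry): Geometry {
  return { name: `${a.name}+${b.name}`, colors: [...a.colors, ...b.colors] };
}

// "Set Color"-style node: evaluates the expression against its OWN geometry input,
// once per vertex of that input.
function setColors(input: Geometry, expr: Contextual<Color>): Geometry {
  return { name: input.name, colors: input.colors.map((_, i) => expr(input, i)) };
}

const red = makeGeometry("red", 2, [1, 0, 0]);
const blue = makeGeometry("blue", 2, [0, 0, 1]);
const merged = merge(red, blue);

// An expression built on top of the contextual: swap the red and blue channels.
const swapped: Contextual<Color> = (ctx, i) => {
  const [r, g, b] = contextualColor(ctx, i);
  return [b, g, r];
};

const result = setColors(merged, swapped);
console.log(result.colors);
// → [[0, 0, 1], [0, 0, 1], [1, 0, 0], [1, 0, 0]]
```

The `swapped` expression never names `red` or `blue`; it only sees the merged geometry that `setColors` passes in, which is the behavior the final Set Color node appears to have in the playground.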

My initial assumption using the editor was that we could explicitly decompose and compose geometry on the fly, like this mockup (recompose not shown but would perform the inverse):

(subsequent transform nodes would apply per vertex)
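Roughly what that mental model would look like as code. This is a hypothetical TypeScript sketch: `decompose`, `recompose`, and `inflate` are invented names for the mockup, and nothing like them is claimed to exist in NGE:

```typescript
// Hypothetical mental-model sketch; none of these names exist in NGE.
type Vec3 = [number, number, number];

interface Geometry {
  positions: Vec3[];
  normals: Vec3[];
}

interface Vertex {
  position: Vec3;
  normal: Vec3;
}

// Explicitly split a geometry into per-vertex records.
function decompose(g: Geometry): Vertex[] {
  return g.positions.map((position, i) => ({ position, normal: g.normals[i] }));
}

// Inverse of decompose: gather per-vertex records back into a geometry.
function recompose(vertices: Vertex[]): Geometry {
  return {
    positions: vertices.map((v) => v.position),
    normals: vertices.map((v) => v.normal),
  };
}

// A per-vertex transform: push each vertex along its own normal.
function inflate(amount: number): (v: Vertex) => Vertex {
  return (v) => ({
    position: [
      v.position[0] + v.normal[0] * amount,
      v.position[1] + v.normal[1] * amount,
      v.position[2] + v.normal[2] * amount,
    ],
    normal: v.normal,
  });
}

const segment: Geometry = {
  positions: [[0, 0, 0], [1, 0, 0]],
  normals: [[0, 1, 0], [0, 1, 0]],
};

const inflated = recompose(decompose(segment).map(inflate(0.5)));
console.log(inflated.positions);
// → [[0, 0.5, 0], [1, 0.5, 0]]
```

Here the per-vertex data is passed around explicitly, whereas the contextual approach leaves it implicit in whichever geometry the consuming node receives.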

I don’t know if this would be more capable than the current pattern; I just wanted to explain my mental model. It’s not a feature request yet, because I don’t know whether it would even work with the underlying design and I’m still a noob at using NGE anyway.