Hey everyone!

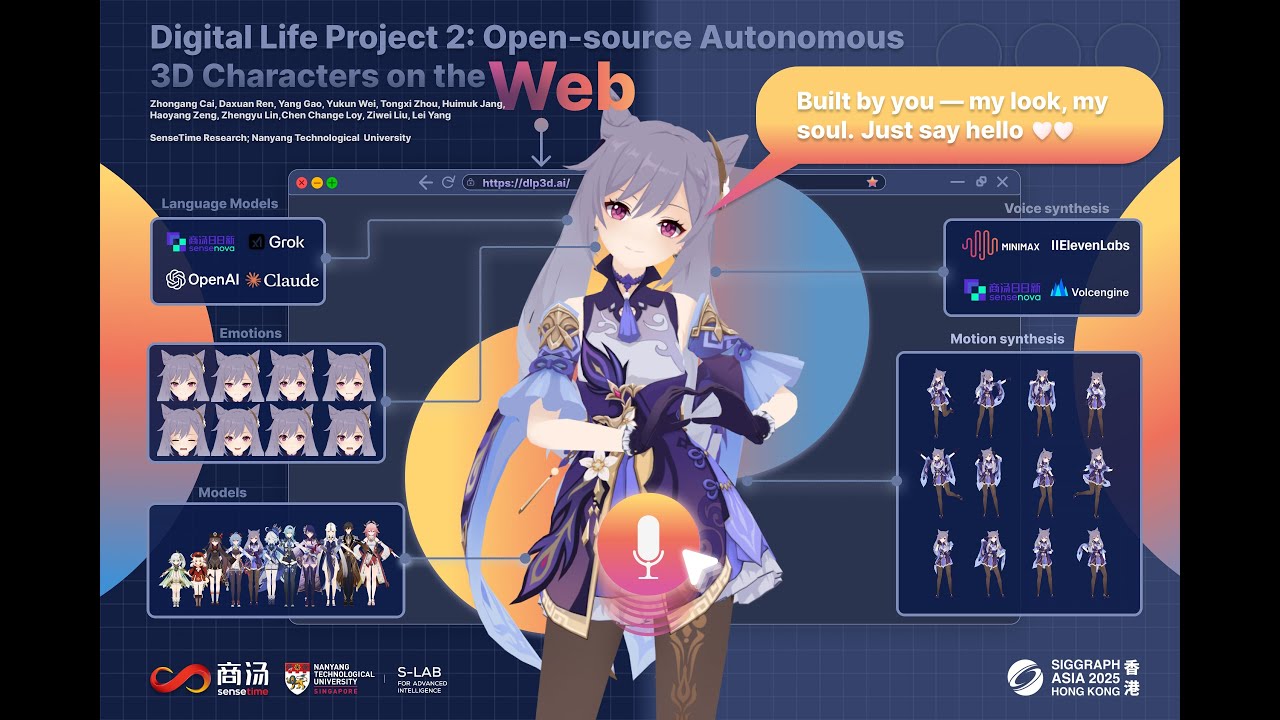

We’re excited to share DLP3D, an open-source project that brings autonomous, interactive 3D digital humans to the browser — powered by Babylon.js.

What is DLP3D?

DLP3D is a full-stack system designed for real-time AI-driven character interactions.

It consists of three main parts:

- Web App. A browser-based interface where you can customize and chat with 3D avatars. Each character is fully configurable, from 3D models and voices to prompts and environments.
- Orchestrator. The “brain” of the system that connects speech recognition (ASR), large language models (LLMs), text-to-speech (TTS), emotion analysis, and animation generation (Audio2Face & Speech2Motion).
- Backend & Cloud Services. Handles authentication, AI service connections, and asset delivery across the platform.
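To give a rough feel for what the Orchestrator coordinates, here is a minimal sketch of one conversational turn. It is only an illustration: the stage names, the signatures, and the ordering (ASR, then LLM, then TTS and emotion analysis, then animation) are assumptions made for this example, not the actual DLP3D API.

```typescript
// Hypothetical stage signatures; none of these names come from the DLP3D codebase.
interface Stages {
  asr: (audio: Uint8Array) => Promise<string>;        // speech -> transcript
  llm: (prompt: string) => Promise<string>;           // transcript -> reply text
  tts: (text: string) => Promise<Uint8Array>;         // reply text -> speech audio
  emotion: (text: string) => Promise<string>;         // reply text -> emotion label
  animate: (speech: Uint8Array, emotion: string) => Promise<number[]>; // -> motion data
}

interface TurnResult {
  transcript: string;
  reply: string;
  emotion: string;
  speech: Uint8Array;
  motion: number[];
}

// Runs one user turn through the assumed stage order.
async function runTurn(stages: Stages, audio: Uint8Array): Promise<TurnResult> {
  const transcript = await stages.asr(audio);
  const reply = await stages.llm(transcript);
  // In this sketch TTS and emotion analysis depend only on the reply text,
  // so they are started together rather than one after the other.
  const [speech, emotion] = await Promise.all([
    stages.tts(reply),
    stages.emotion(reply),
  ]);
  const motion = await stages.animate(speech, emotion);
  return { transcript, reply, emotion, speech, motion };
}
```

Keeping each stage behind a plain function type means any provider can be swapped in, which is in the spirit of the configurable characters described above. A real-time system would likely stream between stages instead of awaiting each one in full.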

Core Features

- Real-time AI Chat. Just hold the mic button and talk with your AI character!
- Runtime Animation Pipeline. Streams facial expressions, voice, and full-body motion in real time.
- Adaptive Motion Blending & Connection Recovery. Keeps everything smooth and responsive.
- Stateful Chat Management. A finite-state machine (FSM) governs the entire chat flow, ensuring stable transitions and consistent behavior.
- Cloth Simulation. Real-time, physically based cloth dynamics for responsive, collision-aware garment motion.
- Efficient Caching. Reuses common assets and minimizes redundant loading for faster performance.
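For readers curious about the FSM-driven chat flow mentioned above, here is a minimal sketch of the idea. The states, events, and transition table are hypothetical and chosen only for illustration; the real DLP3D state machine may look quite different.

```typescript
// Hypothetical states and events; not taken from the DLP3D source.
type ChatState = "idle" | "listening" | "thinking" | "speaking" | "error";
type ChatEvent = "micDown" | "micUp" | "replyReady" | "playbackDone" | "fail" | "reset";

// Every legal transition is listed explicitly; anything else is rejected.
const transitions: Record<ChatState, Partial<Record<ChatEvent, ChatState>>> = {
  idle: { micDown: "listening" },
  listening: { micUp: "thinking", fail: "error" },
  thinking: { replyReady: "speaking", fail: "error" },
  speaking: { playbackDone: "idle", micDown: "listening", fail: "error" },
  error: { reset: "idle" },
};

// Returns the next state, or the current state if the event is not valid here.
// Ignoring stray events (e.g. a duplicate micUp) keeps the flow well defined.
function step(state: ChatState, event: ChatEvent): ChatState {
  return transitions[state][event] ?? state;
}

// Convenience helper: fold a sequence of events over a starting state.
function runEvents(start: ChatState, events: ChatEvent[]): ChatState {
  return events.reduce(step, start);
}
```

For example, `runEvents("idle", ["micDown", "micUp", "replyReady", "playbackDone"])` walks one full turn and ends back in `"idle"`. Because the table is the single source of truth, adding a state such as a reconnect phase would only mean adding rows rather than scattering checks through the UI code.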

Try it out!

GitHub: dlp3d-ai/dlp3d.ai (Open-source Autonomous 3D Characters on the Web)

Live Demo: https://dlp3d.ai

Note

DLP3D is an academic project and will be showcased at SIGGRAPH Asia 2025 Real-Time Live! While the project is released under the MIT License, please respect the licenses of any third-party assets it uses. Some demo materials include Genshin Impact characters by HoYoverse (MiHoYo), used under their fan content policy for non-commercial, educational purposes.