As I said before, I found the primary cause to be a frame-to-frame issue: no matter how still you keep your head, the rotation of the camera oscillates slightly, giving the appearance of an aliasing problem. Anti-aliasing works within a single frame, so I am not sure how well single-frame solutions are going to work.

I ran into this at the end of last year. I had some smooth-shaded chrome meshes, and I had more than edge problems: because the chrome was highly reflective, the shake of the camera made the interiors of the meshes look pretty terrible as well. I tried a number of things.

First, I tried replacing the sub-cameras of the XR camera & damping the minor position & rotation changes, but it always seemed that I only got really good gains once side effects started to appear.
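
For anyone who wants to try the damping route anyway, here is a minimal sketch of the general idea. It is not the code I actually used: a dead zone ignores pose changes smaller than a threshold, and exponential smoothing moves only part of the way toward each new sample. `Vec3`, `Quat`, `PoseDamper` and the default thresholds are all hypothetical, and wiring it into the Babylon.js XR sub-cameras is left out.

```typescript
// Hypothetical minimal math types; these are not the Babylon.js Vector3/Quaternion classes.
type Vec3 = { x: number; y: number; z: number };
type Quat = { x: number; y: number; z: number; w: number };

// Normalized linear interpolation between unit quaternions along the shorter arc.
function nlerp(a: Quat, b: Quat, t: number): Quat {
  const dot = a.x * b.x + a.y * b.y + a.z * b.z + a.w * b.w;
  const s = dot < 0 ? -1 : 1; // flip b so we never take the long way round
  const x = a.x + (s * b.x - a.x) * t;
  const y = a.y + (s * b.y - a.y) * t;
  const z = a.z + (s * b.z - a.z) * t;
  const w = a.w + (s * b.w - a.w) * t;
  const len = Math.hypot(x, y, z, w);
  return { x: x / len, y: y / len, z: z / len, w: w / len };
}

// Angle in radians between the orientations represented by two unit quaternions.
function quatAngle(a: Quat, b: Quat): number {
  const dot = Math.abs(a.x * b.x + a.y * b.y + a.z * b.z + a.w * b.w);
  return 2 * Math.acos(Math.min(1, dot));
}

// Hypothetical per-camera damper: dead zone plus exponential smoothing.
class PoseDamper {
  private readonly posDeadZone: number; // metres
  private readonly rotDeadZone: number; // radians
  private readonly alpha: number; // fraction of the remaining gap closed per update, in (0, 1]
  private pos: Vec3 | null = null;
  private rot: Quat | null = null;

  constructor(posDeadZone = 0.0005, rotDeadZone = 0.001, alpha = 0.3) {
    this.posDeadZone = posDeadZone;
    this.rotDeadZone = rotDeadZone;
    this.alpha = alpha;
  }

  // Feed the raw tracked pose each frame; returns the pose to render with.
  update(rawPos: Vec3, rawRot: Quat): { pos: Vec3; rot: Quat } {
    let pos = this.pos ?? { ...rawPos };
    let rot = this.rot ?? { ...rawRot };
    const d = Math.hypot(rawPos.x - pos.x, rawPos.y - pos.y, rawPos.z - pos.z);
    if (d > this.posDeadZone) {
      // Outside the dead zone: move a fraction alpha toward the new sample.
      pos = {
        x: pos.x + (rawPos.x - pos.x) * this.alpha,
        y: pos.y + (rawPos.y - pos.y) * this.alpha,
        z: pos.z + (rawPos.z - pos.z) * this.alpha,
      };
    }
    if (quatAngle(rot, rawRot) > this.rotDeadZone) {
      rot = nlerp(rot, rawRot, this.alpha);
    }
    this.pos = pos;
    this.rot = rot;
    return { pos: { ...pos }, rot: { ...rot } };
  }
}
```

Raising the dead zones or lowering `alpha` suppresses more of the shimmer, but it also adds lag and makes the view "swim" when you really do move your head, which is one plausible source of the side effects I kept running into.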

Fortunately, I found the PBR material property `enableSpecularAntiAliasing`. This made the chrome look mostly white at a distance, but that is mostly what a browser showed from far away as well. I still had edge issues, but the interiors now looked acceptable.

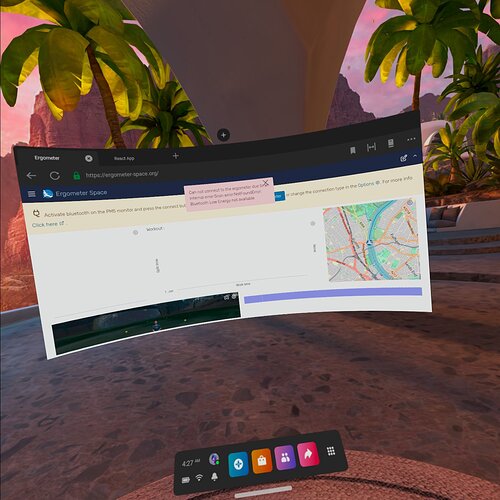

With that, I abandoned trying to do anything with the camera, but I made a scene so you can see it for yourselves: Camera Shake

The scene is just a grid floor, but while you hold down the ‘A’ button of the right controller, I freeze any changes to the position & rotation of the sub-cameras, and you get a solid image. Granted, depending on the exact spot where you freeze, parts may not look good, but the image is rock solid. Even when you run the scene on the desktop, some corners look bad from certain camera positions. This scene is kind of a torture test for aliasing.
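
The freeze itself is simple. Below is a hypothetical helper, not the code in the Camera Shake scene: each frame you pass in the tracked sub-camera pose and whether the A button is held; while it is held, the helper keeps returning the pose captured on the first frame of the press. `Pose` and `PoseLatch` are made-up names, and reading the button from the Babylon.js XR input is left out.

```typescript
// Hypothetical pose type: position in metres, rotation as an (x, y, z, w) quaternion.
type Pose = {
  position: [number, number, number];
  rotation: [number, number, number, number];
};

// Hypothetical helper: passes the tracked pose through, except while frozen,
// when it keeps returning the pose captured at the moment the freeze began.
class PoseLatch {
  private held: Pose | null = null;

  apply(tracked: Pose, freezeButtonDown: boolean): Pose {
    if (!freezeButtonDown) {
      this.held = null; // released: follow tracking again
      return tracked;
    }
    if (this.held === null) {
      // First frame of the press: capture a copy so later tracking updates cannot leak in.
      this.held = {
        position: [...tracked.position] as [number, number, number],
        rotation: [...tracked.rotation] as [number, number, number, number],
      };
    }
    return this.held;
  }
}
```

Releasing the button drops the copy, so the next frame follows the headset again; you would apply the returned pose to each sub-camera before rendering.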

Making everything look fuzzy with a post-process may help, but if multi-view is ever implemented, I think you might not be able to use one. To me, multi-view is going to be more important. Running on Babylon Native, once it supports more than HoloLens, might also give Unreal-like results, since OpenXR would then be used directly rather than through a browser.