Dear Babylon.js Team,

I’m currently using Babylon.js version 7.32.0 on a Meta Quest 2 device running build 69.0, with browser version 35.3. I wanted to ask about support for the latest WebXR features in Babylon.js. I couldn’t find all the information in the issues labeled VR/AR/XR in the BabylonJS/Babylon.js repository on GitHub, so I decided to ask here.

I’ve noticed that multimodal input doesn’t seem to be fully functional yet. For example, if I start with two Quest controllers and set one aside, a hand doesn’t appear in place of the controller. Conversely, if I start with two hands and pick up a controller with my right hand, the controller appears but the left hand disappears.

According to Meta’s browser release notes (https://developers.meta.com/horizon/documentation/web/browser-release-notes):

“WebXR Multimodal Input: If hands are enabled in WebXR, you can now get one hand and one controller. In addition, the non-primary inputs can now be tracked in the session’s trackedSources attribute.”

“Logitech MX Ink: WebXR support for new 6DoF pen controller with 2 buttons and 2 pressure sensitive areas”

I’m expecting to receive the XR pen soon (the Logitech MX Ink, sold as the MR Stylus for Meta Quest 3 and Meta Quest 2). I would like to get multimodal input working so I can use hand tracking with my left hand for object manipulation and the pen in my right hand for more precise interactions. The pen was developed in collaboration with Meta and has received positive reviews. There’s a Three.js template available (fcor/mx-ink-webxr on GitHub, which uses Three.js and WebXR to provide an immersive 3D painting experience with the MX Ink), but I couldn’t find a Babylon.js equivalent.
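To narrow down whether the missing hand is a browser or a Babylon.js matter, here is a minimal sketch of how I would check what the session itself reports. It only relies on the standard `inputSources` list and the `trackedSources` attribute quoted above; the helper names `summarizeSources` and `describeSource` and the two small interfaces are my own, hypothetical, and exist only so the snippet compiles without WebXR typings.

```typescript
// Minimal structural types so the sketch compiles without WebXR typings.
interface InputSourceLike {
    handedness: string;            // "left", "right" or "none"
    hand?: unknown;                // set when the source is a tracked hand
    profiles?: readonly string[];  // input profile names, if any
}

interface SessionLike {
    inputSources: Iterable<InputSourceLike>;
    // Assumption: only present on browsers that implement multimodal input.
    trackedSources?: Iterable<InputSourceLike>;
}

// Hypothetical helper: label a source as a hand or a controller.
function describeSource(source: InputSourceLike): string {
    const kind = source.hand ? "hand" : "controller";
    return `${source.handedness} ${kind}`;
}

// Hypothetical helper: list primary and non-primary (tracked) sources.
function summarizeSources(session: SessionLike): { primary: string[]; tracked: string[] } {
    return {
        primary: Array.from(session.inputSources, describeSource),
        tracked: Array.from(session.trackedSources ?? [], describeSource),
    };
}

// Possible use inside a running Babylon.js XR experience (xr is the
// default experience helper):
//   const session = xr.baseExperience.sessionManager.session;
//   console.log(summarizeSources(session as unknown as SessionLike));
```

If the hand shows up under `tracked` but never under `primary`, that would suggest the browser is reporting it and only the rendering side is missing.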

Additionally, the release notes mention:

“WebXR: add experimental support for unbounded spaces”

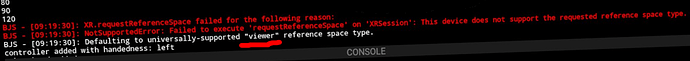

In AR, the Meta boundary still appears even though it should not be needed, and objects added to the scene don’t stay in place when referenceSpaceType is set to “unbounded”.
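For reference, this is roughly the configuration I mean, written as a small sketch. The function name `buildUnboundedArOptions` and the interface `UnboundedArOptions` are hypothetical; `uiOptions.sessionMode`, `uiOptions.referenceSpaceType` and `optionalFeatures` are the default-experience options as I understand them. Per the WebXR spec the "unbounded" feature has to be requested for the session to grant that reference space; I am not sure whether Babylon.js already requests it automatically, so listing it here may be redundant.

```typescript
// Local shape covering only the options used in this sketch.
interface UnboundedArOptions {
    uiOptions: {
        sessionMode: "immersive-ar";
        referenceSpaceType: "unbounded";
    };
    optionalFeatures: string[];
}

// Hypothetical helper: build the options for an AR session that asks
// for the unbounded reference space.
function buildUnboundedArOptions(): UnboundedArOptions {
    return {
        uiOptions: {
            sessionMode: "immersive-ar",
            referenceSpaceType: "unbounded",
        },
        // Ask the browser for the "unbounded" feature as an optional
        // feature, so the session still starts if it is not granted.
        optionalFeatures: ["unbounded"],
    };
}

// Possible use with an existing Babylon.js scene:
//   const xr = await scene.createDefaultXRExperienceAsync(buildUnboundedArOptions());
```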

“WebXR: transform is now provided for the origin reset event”

I’m not entirely sure of the implications, but it might be useful in certain scenarios.
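As far as I understand it, this refers to the `transform` attribute on the `reset` event that a WebXR reference space fires when the origin changes (for example after recentering). A minimal sketch of how it could be observed follows; `watchOriginReset` and `ResetEventLike` are hypothetical names of my own, and the structural type only covers the position part of the transform.

```typescript
// Structural type: only the field this sketch reads from the event.
interface ResetEventLike extends Event {
    transform?: { position: { x: number; y: number; z: number } } | null;
}

type Position = { x: number; y: number; z: number };

// Hypothetical helper: call onReset with the position part of the reset
// transform, or null when the browser does not provide one.
// Returns a function that removes the listener again.
function watchOriginReset(
    space: EventTarget,
    onReset: (position: Position | null) => void
): () => void {
    const handler = (event: Event): void => {
        const transform = (event as ResetEventLike).transform;
        onReset(transform ? transform.position : null);
    };
    space.addEventListener("reset", handler);
    return () => space.removeEventListener("reset", handler);
}

// Possible use in Babylon.js once the session has started:
//   const space = xr.baseExperience.sessionManager.referenceSpace;
//   const stop = watchOriginReset(space, (p) => console.log("origin reset", p));
```

If the position is reported reliably, it might be possible to shift anchored content by the same offset so that it stays where the user left it.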

“WebXR: Depth sensing implementation has been updated to support the latest spec. Older implementations will continue to work with a warning.”

There’s already an issue on GitHub regarding this. It could be beneficial for AR experiments.

I also read that body tracking support is coming to Babylon.js (issue #14960, “[WebXR] Implement body tracking”, in the BabylonJS/Babylon.js repository). I’m curious about how this will work with multimodal input. Will both the body and controllers be rendered simultaneously, or only the controllers? What’s the current status of the body tracking implementation?

I’m interested in any updates on these new WebXR features and any other recent developments.

Thank you for your time, and I appreciate all the great work you’re doing with Babylon.js!

Best regards,

Tapani